Embrace the machine

By Jonathan Downie

As remote interpreting platforms start to offer AI services, Jonathan Downie outlines how interpreters might respond

Conference interpreting was in shock. At the start of 2023, KUDO, a leading remote interpreting platform, announced that they had launched “the World’s first fully integrated artificial intelligence speech translator”. Many interpreters were furious. It seemed that a platform built to offer them work had now created a service that would take work away. Despite demos and reassurances, doubts remained.

Later, another remote interpreting platform, Interprefy, released their own machine interpreting solution. This time, the response was much more muted, but the precedent has now been set. The days when human and machine interpreting were completely separate are over. What does this mean for human interpreters, and how should we adjust?

Back to the facts

To understand what this means for human interpreters, we need to know a bit about how machine interpreting works. Machine interpreting uses one of two models. The first is the cascade model. This takes in speech, converts it to text, passes the text through machine translation, and then reads out the translation through automatic speech synthesis. The second is the rarer end-to-end model. This takes in sound, analyses it and then uses that to create sounds in the other language. There is no written stage.

The cascade model ignores emotion, intonation, accent, speed, volume and emphasis. It may be able to handle tonal languages but anything that cannot be represented in simple text is discarded. Cascade model systems are therefore very poor in situations that need emotional sensitivity, and clever uses of tone of voice, sarcasm, humour or timing. End-to-end models can, at least in principle, get over most of those hurdles. In theory, anything that can be heard can be processed. These models might also perform better with accents and faster speech.
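To make the point about the cascade model concrete, here is a minimal sketch in Python. It is an illustration only, not how KUDO, Interprefy or any real system is built: every name in it (`Utterance`, `speech_to_text`, `machine_translate`, `synthesise`, `cascade_interpret`) is hypothetical, and the word-for-word glossary lookup is a toy stand-in for real machine translation. What it shows is the structure of the pipeline: once speech becomes plain text, the emotion, emphasis and speed have nowhere to travel.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """A spoken turn: the words plus the delivery features text cannot carry."""
    words: str
    emotion: str = "neutral"
    emphasis: str = "none"
    speed_wpm: int = 150


def speech_to_text(utterance: Utterance) -> str:
    # Stage 1 (hypothetical): transcription keeps the words and nothing else.
    return utterance.words


def machine_translate(text: str, glossary: dict[str, str]) -> str:
    # Stage 2 (toy stand-in for MT): word-for-word lookup in a glossary.
    # Unknown words are passed through unchanged.
    return " ".join(glossary.get(word.lower(), word) for word in text.split())


def synthesise(text: str) -> Utterance:
    # Stage 3 (hypothetical): speech synthesis receives only text,
    # so it falls back to default delivery.
    return Utterance(words=text)


def cascade_interpret(utterance: Utterance, glossary: dict[str, str]) -> Utterance:
    # The full cascade: speech -> text -> translated text -> speech.
    return synthesise(machine_translate(speech_to_text(utterance), glossary))


source = Utterance(words="merci beaucoup", emotion="sarcastic", speed_wpm=210)
glossary = {"merci": "thanks", "beaucoup": "a lot"}
result = cascade_interpret(source, glossary)

print(result.words)    # the words come through
print(result.emotion)  # the sarcasm does not
```

In this sketch the terminology arrives intact, but a sarcastic "thanks a lot" comes out as a neutral one. An end-to-end model, which has no text stage, would not be forced into that bottleneck in the same way.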

Neither model can check if people understand the output, but they can be customised for different clients and fields. Neither model will do anything to adjust to social context, such as who is speaking to whom, differences in status and the emotional resonance of what is going on. Yet both promise to handle numbers, names and specialist terminology as well as, if not better than, most trained humans.

In short, machine interpreting will soon beat humans at any kind of terminological and numerical accuracy we might care to measure. We will still beat machines at customising our work for the audience, reading the room, speaking beautifully and pausing naturally.

Understanding client attitudes

It should come as no surprise that the one video of a test of machine interpreting under semi-realistic conditions showed exactly what we might expect. In a video for Wired last year,1 two interpreters found that, while KUDO’s system was excellent at picking up specific terms, it did a poor job of prioritising information and tended to produce some unnatural turns of phrase.

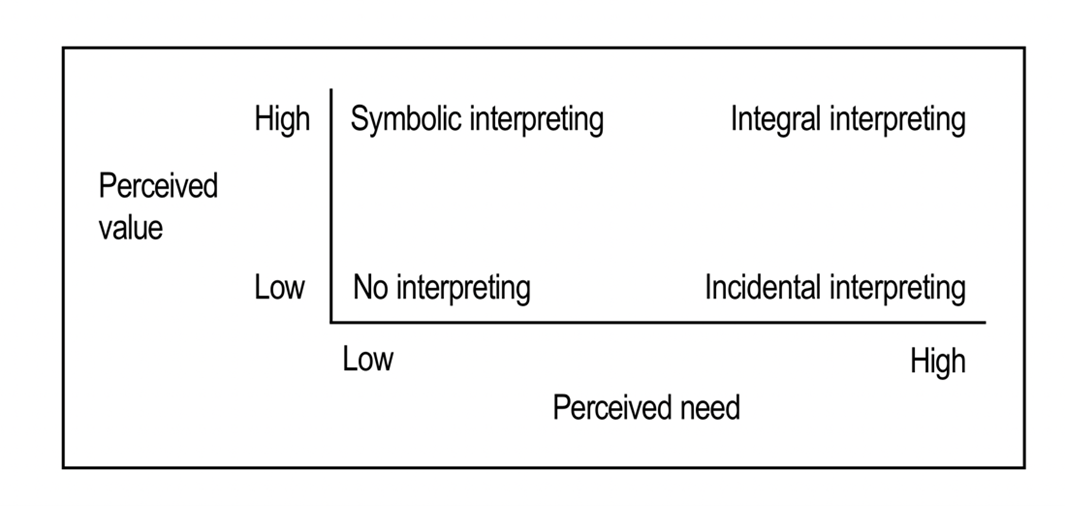

The overall result was that, while machine interpreting would do well enough as a stop-gap, it wasn’t reliable as a human replacement when it really mattered. But this gives us less reason to be joyful than we might think. This kind of nuanced analysis is foreign to most interpreting clients. We might happily talk about intonation, cultural nuance and terminology; very few of our clients do. To learn how clients think, we can use a simple model for classifying clients:2

Some clients think that they need interpreting but wish they didn’t. They might hire interpreters to get a job done but they have no intention of working out how to integrate interpreting better into their workflows. These are the incidental interpreting clients – those who think we should just be able to turn up, sit in the booth, work and then go home, all with a minimum of fuss.

Then there are clients who have a wonderful view of how powerful or politically important it would be to have interpreters. They hire us but do not actually use their headsets as they all speak the same languages anyway. This is symbolic interpreting. For those clients, as long as we look the part and are happy to appear to be working, it’s all good.

Finally, apart from those who don’t think they need interpreting and don’t want it, there is integral interpreting. This is when clients think they need interpreting and really see the value of it for the future of their business or organisation. These clients see us as partners and want to work with us to make sure their event is a success.

The most important thing to learn from this diagram is that different clients want different things and so are likely to make different buying decisions. Clients will always prioritise the success of their event or company over our careers. It is when they think the success of their event relies on close partnership with interpreters that they will buy our services.
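The client model above can be read as a simple two-question grid. The sketch below is one possible reading, not the author's own formalisation: the two yes/no questions, the names `ClientType` and `classify_client`, and the label `NON_CLIENT` for those who neither need nor want interpreting are all assumptions made for illustration.

```python
from enum import Enum


class ClientType(Enum):
    INCIDENTAL = "incidental"   # need it, wish they didn't
    SYMBOLIC = "symbolic"       # like having it, don't actually use it
    INTEGRAL = "integral"       # need it and see its value
    NON_CLIENT = "non-client"   # hypothetical label: neither need nor want it


def classify_client(thinks_it_needs_interpreting: bool,
                    values_interpreting: bool) -> ClientType:
    # Assumed reading of the model: two yes/no questions give four cells.
    if thinks_it_needs_interpreting and values_interpreting:
        return ClientType.INTEGRAL
    if thinks_it_needs_interpreting:
        return ClientType.INCIDENTAL
    if values_interpreting:
        return ClientType.SYMBOLIC
    return ClientType.NON_CLIENT


# A client who hires interpreters only to get the job done:
print(classify_client(thinks_it_needs_interpreting=True,
                      values_interpreting=False).value)
```

Real clients will not fall neatly into one cell, but the grid makes it easier to see why the same sales message cannot work for all of them.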

To complicate matters, there are several possible solutions people can use when they need to understand someone who speaks another language. A few years ago, you were limited to interpreters, random strangers or phrasebooks. Nowadays people can choose from phone, remote and in-person interpreting, live-subtitling, and speech-to-text interpreting from humans, as well as apps, platforms, earpieces and automated subtitles, all using some kind of machine interpreting.

In addition, professional interpreting of any kind has become more complex and the choices on offer have multiplied, with varying prices, strengths, weaknesses, potential and audiences. Like it or not, we now work in crowded marketplaces. So what will it take to thrive?

Practical steps we can take

If we are no longer the only solution then we can’t afford to sell ourselves as if we were. Selling ‘accurate interpreting’ is now like selling a car and boasting that it has an engine. We can also no longer afford to sell ourselves as being so good it’s as if we weren’t even there. The people who want us to be invisible are the incidental interpreting clients, who are already thinking of moving over to machine interpreting.

Instead, we need to talk more about the differences we make. What intelligent decisions do we make that machines can’t? What is the difference in terms of results between working with a human and working with a machine? We need to have answers to those questions that are convincing to clients.

We also have the possibility of using technologies to create better interpreting environments. How can we use machine interpreting and other AI technologies to improve the services we offer? Can we find ways to use AI to speed up or improve our preparation or to catch errors?

Finally, it does seem as if we need to think about where we can specialise. For some, their specialism might be a rare language combination. For others, it might be some kind of meeting or domain. We already have specialist medical conference interpreters. Might we end up with interpreters specialising in meetings to do with law? Might we have specialist manufacturing interpreters or business negotiation interpreters or church interpreters, or specialists in construction or high technology?

When we are not the only option, and especially when remote simultaneous interpreting (RSI) platforms are selling machine interpreting too, we need to give buyers a compelling reason to choose us. Having some kind of specialisation might give us the insight to know what extra information will convince clients as well as creating better interpreting.

These changes can’t be made overnight. Some interpreters might not manage to adjust. It is hard to specialise when most of your income goes on bills, food, light and heat. I know of colleagues who have left the sector. Honestly, I don’t blame them. There are lots of challenges for those of us who stay. We simply won’t manage on our own; we need to work together to support and encourage each other. Interpreting has just become much more competitive. We will only survive if we learn to cooperate with each other and not compete.

Notes

1 ‘Pro Interpreters vs. AI Challenge: Who translates faster and better?’, Wired; https://cutt.ly/3wVvRAY8

2 Downie, J (2016) ‘Stakeholder Expectations of Interpreters: A multi-site, multi-method approach’. Unpublished PhD dissertation, Edinburgh: Heriot-Watt University

Dr Jonathan Downie is an interpreter, researcher and speaker. He is the author of Interpreters vs Machines and Multilingual Church: Strategies for making disciples in all languages.

This article is reproduced from the Spring 2024 issue of The Linguist.

